This project started in early 2024 from a series of online conversations between Human-Centered Design scholar Daniela Rosner, feminist technology scholar and AI Forensics project researcher Goda Klumbytė, and me.

We started our discussion with the transparency and explainability of AI. The decision-making process of AI, particularly the process within an artificial neural network, is a black box. There has been research and development on generating explanations of this decision-making process, which is called XAI (explainable AI). But we asked: is a textual explanation what we need? Does AI become transparent once its decision-making process is explained? Is explainability really what is at stake when the transparency of AI becomes an issue?

As a counter-position to XAI, we started to imagine a feelAI. What if we could feel the AI, its being and its behaviors? We understand our loved ones: not only our human partners and friends, but also companion animals, or even a loom we have been using for years… We understand without explanations. We feel it, and it would be hard to explain verbally how we understand them.

Our discussion reminded me of an earlier experience I had with Kate in the Embodied Voice project. Kate is a trained performer, and she wore a sensor-embedded suit. We trained an ML model on particular postures Kate performed and mapped its output to the parameters of a voice-generating synthesizer. The idea was to let the computer learn the meanings of Kate's movements and express them as a synthesized voice. Instead, Kate was learning through her body how the ML model behaves. In other words, she was embodying an understanding of the ML system (or its decision-making model).

I wanted to recreate what Kate was experiencing, the embodied experience of an AI system, without a tight sensor suit to wear, and open to untrained performers. This is the starting point of this project.

Zagreb Residency

Deborah Hustic from Radiona Zagreb kindly offered me a residency at their space. From June 10 to 23, I was in Zagreb to start working on the first FeltAI prototype. This was my first visit to Zagreb, and everything was new and exciting! My favorite was the small marketplace near the Radiona lab space. They had fresh fruits and vegetables alongside textiles and children's clothes. The cheese borek from a small shop in the market was absolutely the best!

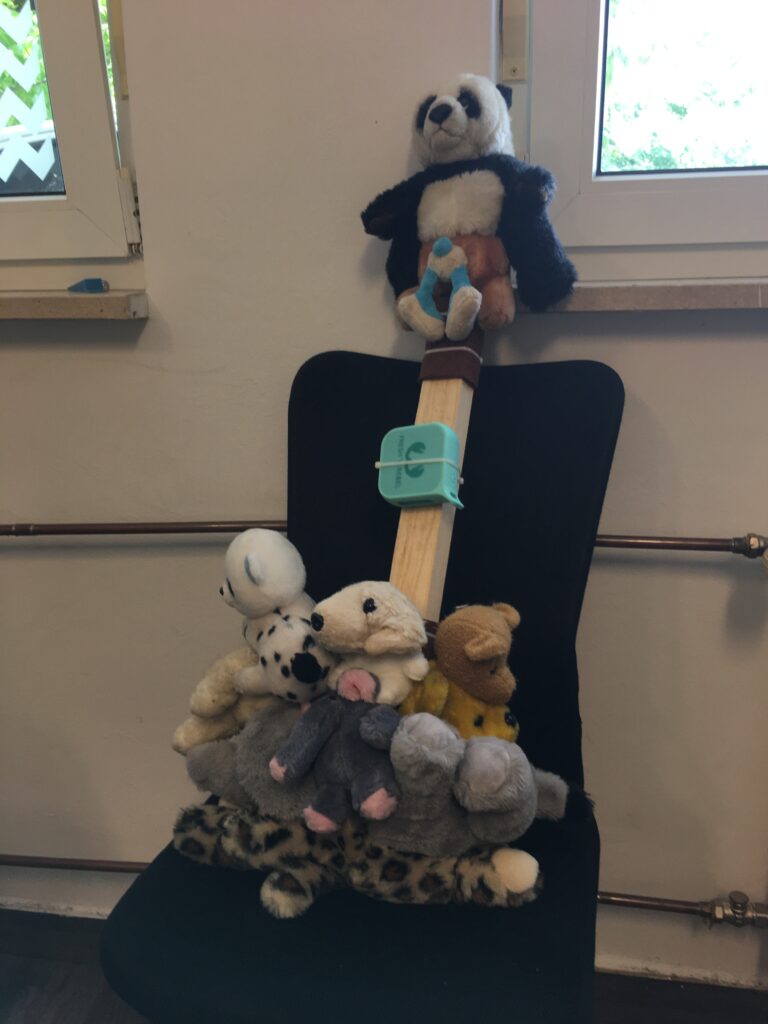

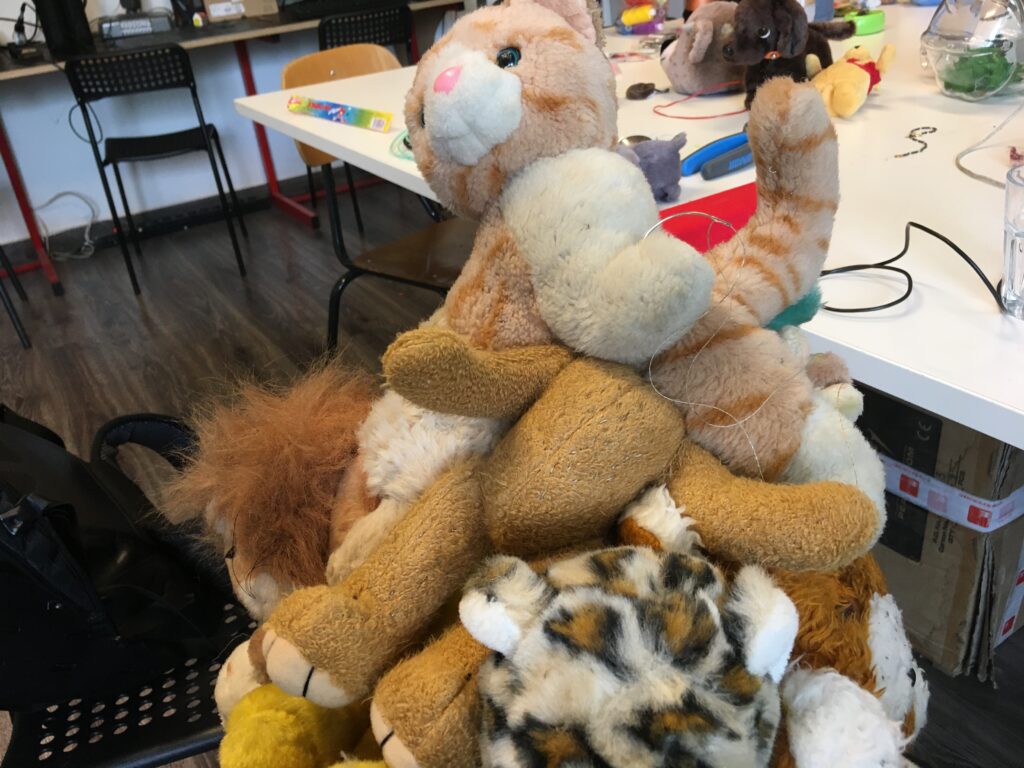

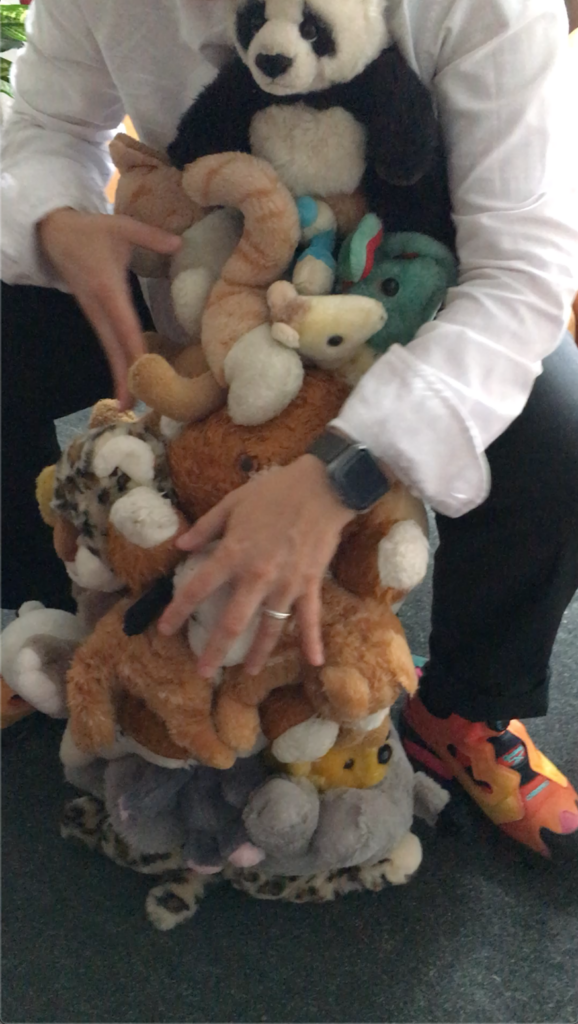

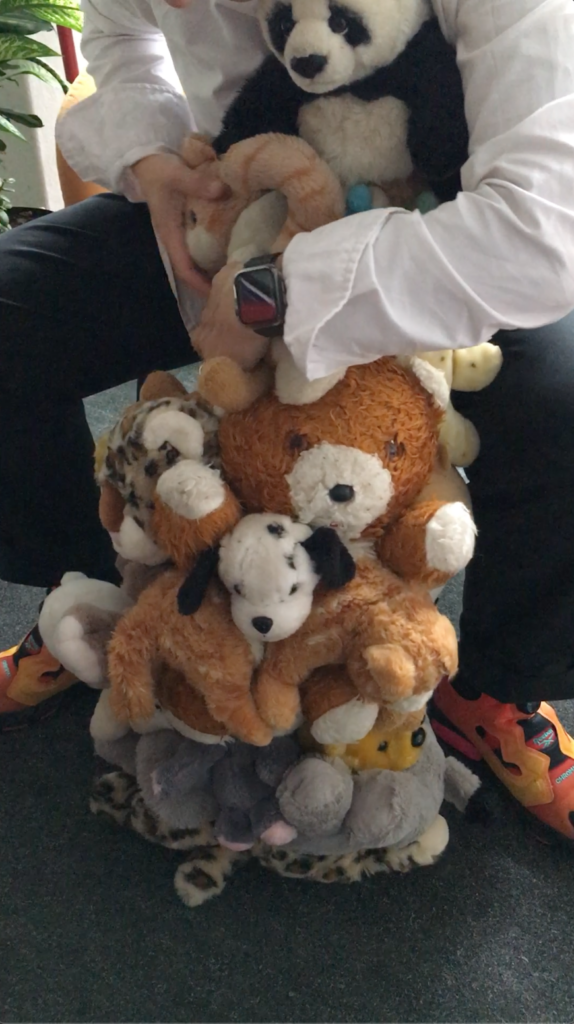

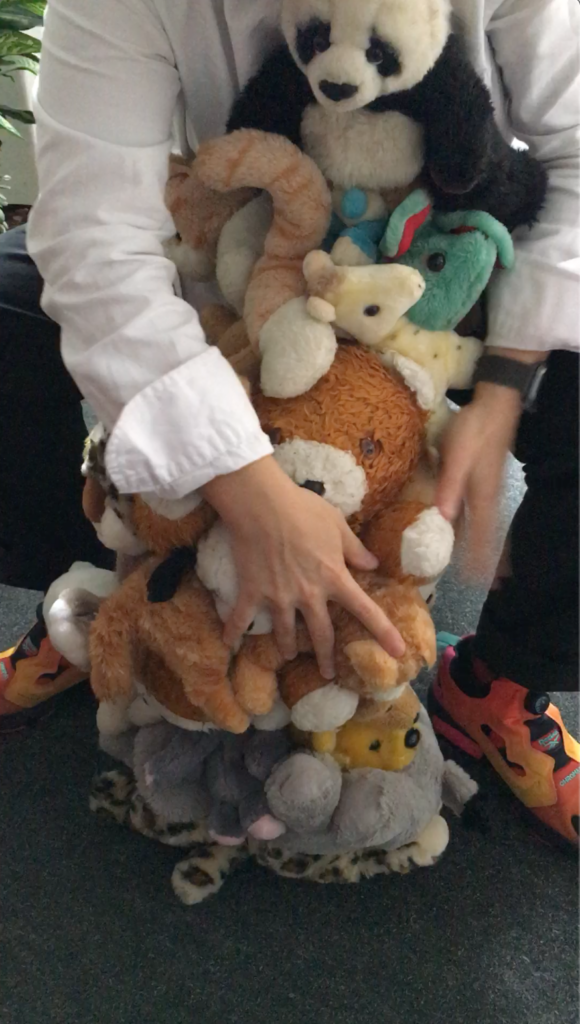

My plan for the first feltAI prototype was to make it an instrument-like object, so people could try it easily without having to wear something intimate. I imagined something like a cello, but made of fabric that you can squeeze and hug. I pictured it made of stuffed animals from childhood memories, full of stories, meanings, and emotions.

On Sundays, Deborah took me to a great flea market in Zagreb. It is a perfect place for material hunting, and it is hard not to buy too much! We bought bags full of old stuffed animals. Some are classic teddy bears that have clearly been through many kids' hands… others are new characters from recent TV shows.

I did not want to use them as they were, but to remix their meanings. I started dissecting the stuffed animals and creating mutants. It felt quite bad to chop them into pieces, even though they are just fabric and acrylic stuffing. Chopping the head off the body was especially difficult… as if I were killing them!

I assembled the reorganized animals into the shape of a cello, using a wooden stick to provide the main structure and to hold the electronics inside. In this first attempt at an instrument, I stitched silver conductive thread into the surfaces of the animals. The end of each thread is connected to a thin cable soldered to a Trill Craft touch board, and this makes the animals touch-sensitive. Overall, there are 30 touch points on this stuffed animal cello. The main computation runs on a Bela Mini board running a Pure Data patch, where the 30 separate touch sensor readings are fed into an ml.lib artificial neural network object.

FeltAI meeting and try-outs

In early July 2024, we had a meeting with Daniela and Goda. Along with discussions and mini workshops, we also tried out the newly built first prototype of the interface.

I pre-trained the ml.lib model on a few different touch interactions, each paired with specific synthesis parameters. In these try-outs, I used two different kinds of synthesis. One is based on an old voice synthesis system called the Voder, and the other is based on an acid filter synthesizer.
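To make "pre-training on touch interactions paired with synthesis parameters" concrete, here is a minimal Python sketch of the same idea. It is not ml.lib, which does this inside the Pure Data patch. It is a tiny one-hidden-layer network written from scratch that learns to map a 30-value touch frame to two synth parameters. The gestures, sensor indices, parameter values, layer size, and learning rate are all hypothetical choices for illustration.

```python
import math
import random

N_SENSORS = 30  # the cello prototype has 30 touch points


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden layer of sigmoid units and linear outputs, trained by plain SGD."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def predict(self, x):
        return self.forward(x)[1]

    def train_step(self, x, target, lr=0.1):
        h, y = self.forward(x)
        dy = [yk - tk for yk, tk in zip(y, target)]  # gradient of 0.5 * squared error
        # Backpropagate to the hidden layer before updating w2.
        dh = [sum(dy[k] * self.w2[k][j] for k in range(len(dy))) * h[j] * (1.0 - h[j])
              for j in range(len(h))]
        for k, row in enumerate(self.w2):
            for j in range(len(row)):
                row[j] -= lr * dy[k] * h[j]
            self.b2[k] -= lr * dy[k]
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * dh[j] * x[i]
            self.b1[j] -= lr * dh[j]


def touch(active):
    """Hypothetical touch frame: 1.0 on the touched sensors, 0.0 elsewhere."""
    active = set(active)
    return [1.0 if i in active else 0.0 for i in range(N_SENSORS)]


def mse(model, examples):
    errs = [sum((y - t) ** 2 for y, t in zip(model.predict(x), target)) / len(target)
            for x, target in examples]
    return sum(errs) / len(errs)


# Hypothetical training set: touch gesture -> two synth parameters scaled to 0..1.
examples = [
    (touch(range(0, 5)), [0.2, 0.8]),        # stroke the "head" sensors
    (touch(range(10, 16)), [0.9, 0.1]),      # squeeze the "neck"
    (touch(range(N_SENSORS)), [0.5, 0.5]),   # hug the whole cello
    (touch(range(20, 30)), [0.1, 0.3]),      # hold the "body"
]

model = TinyMLP(N_SENSORS, n_hidden=8, n_out=2, seed=1)
initial_error = mse(model, examples)
rng = random.Random(2)
for epoch in range(500):
    for x, target in rng.sample(examples, len(examples)):  # shuffled order each epoch
        model.train_step(x, target, lr=0.1)
final_error = mse(model, examples)
print(f"MSE before: {initial_error:.4f}, after: {final_error:.4f}")
```

After training, any touch frame, including ones never shown to the model, produces some parameter values. That in-between behavior is what a player explores when playing the instrument.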

Screenshot of Daniela (above) and Goda (below) playing with the interface. It is definitely a hand-heavy interaction. It feels much more like a set of discrete buttons or keys than a single continuous object. Goda took a much more whole-body approach when playing with it.

Overall, making the touch points with embroidery showed its limitation: the interface behaves much more like discrete buttons than like an object that senses as a whole. I will need to reconsider the inner mechanisms of the interface. We all liked that it is made of old stuffed animals, and the meanings they carry. I also want to make a second interface, because I would like to see what happens with two devices, when two people can interact with, relate to, and react to each other. This will be my next step.

Attempt at a dialog version

For our meeting with Daniela and Goda at the end of August, I made a second interface: this sad deer stuffed animal. It looks sad because it lost some of its stuffing when I washed it. Originally, the idea was to make two cello interfaces so people could use the instruments to dialog with one another. I did not have enough time to make a second cello before the meeting, so I turned this sad deer into an interface instead.

The cello prototype also got a violin/cello-style head, so you can hold it from the top and lean it against your body. It also has a leg like a cello's, which is not attached in the photo.

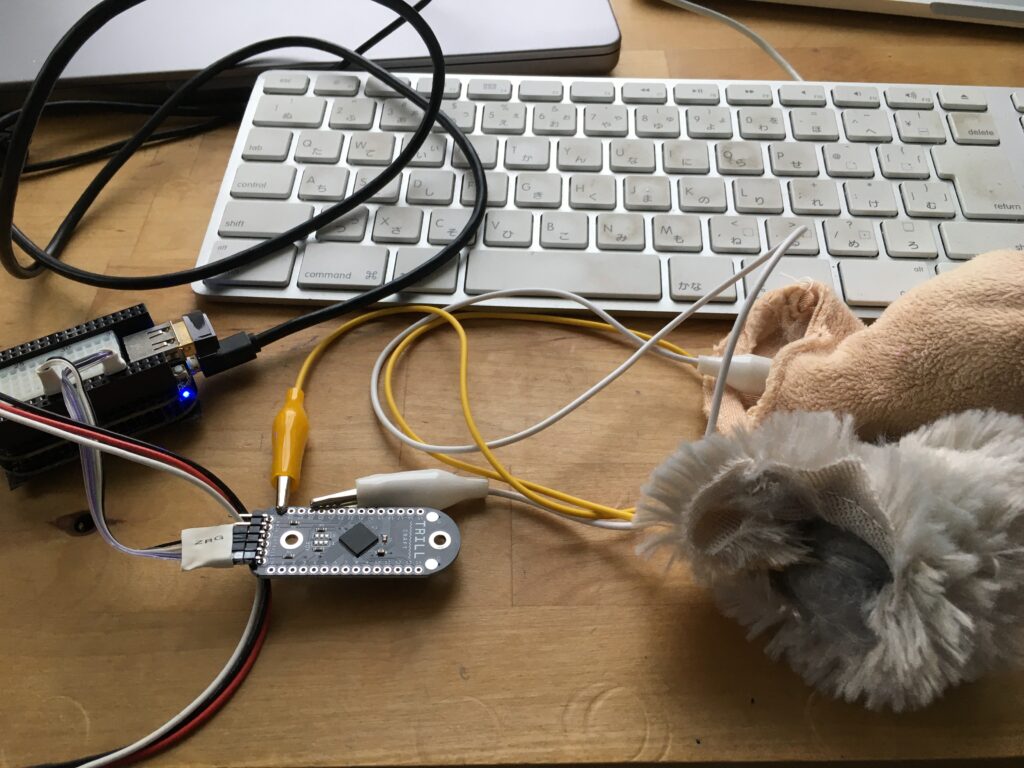

Revision: touch sensor, thread to wool

The problem with the previous prototype was that the touch-sensitive parts were made of conductive thread stitched onto the surface of the animals. Some of the animals have quite long fur, so it was hard for the thread to make contact with a person's skin during interaction. The animals were also sensitive only at the stitched areas, which is not very intuitive because you cannot touch them just anywhere. I had wanted to look into this issue for a while, and finally I did. I tried stuffing parts of the stuffed animal with synthetic conductive wool (20% stainless steel fiber, 80% acrylic) and connecting it to the Trill touch sensor board with a crocodile clip. Voilà! It works, just like that! Magic. Now I have to disassemble all the stuffed animals, change the stuffing, remove the conductive threads, and rewire the cables… yes, I am motivated.

Here is the synthetic conductive wool. I have a lot of it left over from a previous project. Each cable is connected to the wool by stitching conductive thread into it. Since there are multiple sensitive parts in one animal, I needed to wrap each part in stretchy fabric so they do not touch each other inside. They are then inserted into the animal along with normal stuffing.

The sensor readings are sent via RabbitControl to a computer, where I monitor them and test them with synth parameters. In the video, you can see individual sliders moving as I touch various parts of the stuffed animal. For now, I have assigned individual sensor readings directly to parameters of the pinktrombone~ voice synthesizer, and that is what you hear in the video.
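The direct mapping amounts to a linear rescale from a sensor's reading range to a parameter's range, with one sensor per parameter. The Python sketch below assumes sensor readings normalized to 0..1. The parameter names and ranges in `ROUTING` are hypothetical placeholders, not the actual pinktrombone~ inlets.

```python
def map_sensor(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly rescale one sensor reading into a synth parameter range, clamped at both ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)  # clamp so a hard squeeze cannot push the parameter out of range
    return out_lo + t * (out_hi - out_lo)


# Hypothetical one-to-one routing: sensor index -> (parameter name, low, high).
ROUTING = {
    0: ("pitch_hz", 80.0, 300.0),
    1: ("tongue_position", 0.0, 1.0),
    2: ("breath", 0.0, 1.0),
}


def frame_to_params(frame, in_lo=0.0, in_hi=1.0):
    """Turn one frame of sensor readings into a dict of synth parameter values."""
    return {name: map_sensor(frame[i], in_lo, in_hi, lo, hi)
            for i, (name, lo, hi) in ROUTING.items()}


print(frame_to_params([0.5, 1.2, -0.1]))
# {'pitch_hz': 190.0, 'tongue_position': 1.0, 'breath': 0.0}
```

With this kind of mapping, every sensor moves exactly one parameter. A trained model would instead blend all sensors into all parameters at once.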

And here is the sad stuffed deer, trained with ml.lib on 8 different sound points for Pink Trombone.

Reflection:

Last night, I started to wonder what I am trying to do with this project. I started with the idea of “feeling the decision-making border of AI systems through body/movement experience”, thinking that you can feel and understand AI. I often compared this with other tacit knowledge we learn, like riding a bicycle, playing the violin, or carving wood. In these, more than textual explanation takes place, and we understand in an embodied manner. Originally, my take was that “AI is a mere tool”, and that we can understand it in a tacit way, just as we understand other types of tools.

But as I was trying out the stuffed animal prototype, I started to lose the point. I had not included machine learning in the system. It was just sensor data directly changing the parameters… and of course one can manipulate and play it. When I introduce ML to map the data, it will not be so different. After all, this kind of artificial neural network is a complicated threshold filter.
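The "complicated threshold filter" view can be shown in a few lines of Python. A single artificial neuron compares a weighted sum of its inputs against a threshold. Stacking neurons combines several thresholds into a decision region. In the sketch below, the weights are hand-set rather than trained, and the single "pressure" input is a hypothetical example.

```python
import math


def step_neuron(x, weights, bias):
    """Fires 1 when the weighted sum of x crosses the threshold -bias, else 0."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0


def sigmoid_neuron(x, weights, bias):
    """Same weighted sum, but a smooth (soft) threshold, as used when training networks."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-s))


def band_detector(pressure):
    """Two thresholds combined by a third neuron: fires only for medium pressure (0.3 < p < 0.7)."""
    above_low = step_neuron([pressure], [1.0], -0.3)
    below_high = step_neuron([pressure], [-1.0], 0.7)
    return step_neuron([above_low, below_high], [1.0, 1.0], -1.5)  # logical AND


for p in (0.1, 0.5, 0.9):
    print(p, band_detector(p))
# 0.1 0
# 0.5 1
# 0.9 0
```

A trained network does the same thing with many more inputs and learned weights. The boundaries it draws are where its "decisions" flip.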

This brings me back to Daniela's nice summary of what we are trying to do:

(1) what is AI?

(2) how can we understand AI otherwise?

(3) how can we make AI otherwise?

Question (1) is quite important, because many types of AI are used in society now. It is not only ChatGPT or deepfake image generators, and ANNs and machine learning techniques are not the only things in the category of AI. I went back to an interview video I made in 2019. In it, Prof. Brown gives a very simple and nice explanation: “systems that react sensibly and appropriately to their environment”. Perhaps this “sensible reaction” is what earns these systems the name “intelligence” and distinguishes them from other technological systems. In my prototype, I first train the system with a set of interactions, and that is the part where it “makes sense”. When Kate was training our Embodied Voice system, she used the Grotowski movement alphabet to give examples to our ML system. These movements had a strong meaning for her, since she was trained in Grotowski theater methods. I, on the other hand, was giving random touch points to my prototype, so there was no sense to it, even for me. No wonder my system does not seem to react sensibly. And I am connecting it to a voice synthesis patch that plays out random vowels, so there is no “sense making” in this mapping either.

And maybe this “sensible reaction” plays a big role in the transparency discussion. Say I ask a trained LLM: “Give me a short sentence that reminds you of ‘sky’”. When it answers with words like “blue” and “stretched endlessly”, it feels like a “sensible reaction” to me.

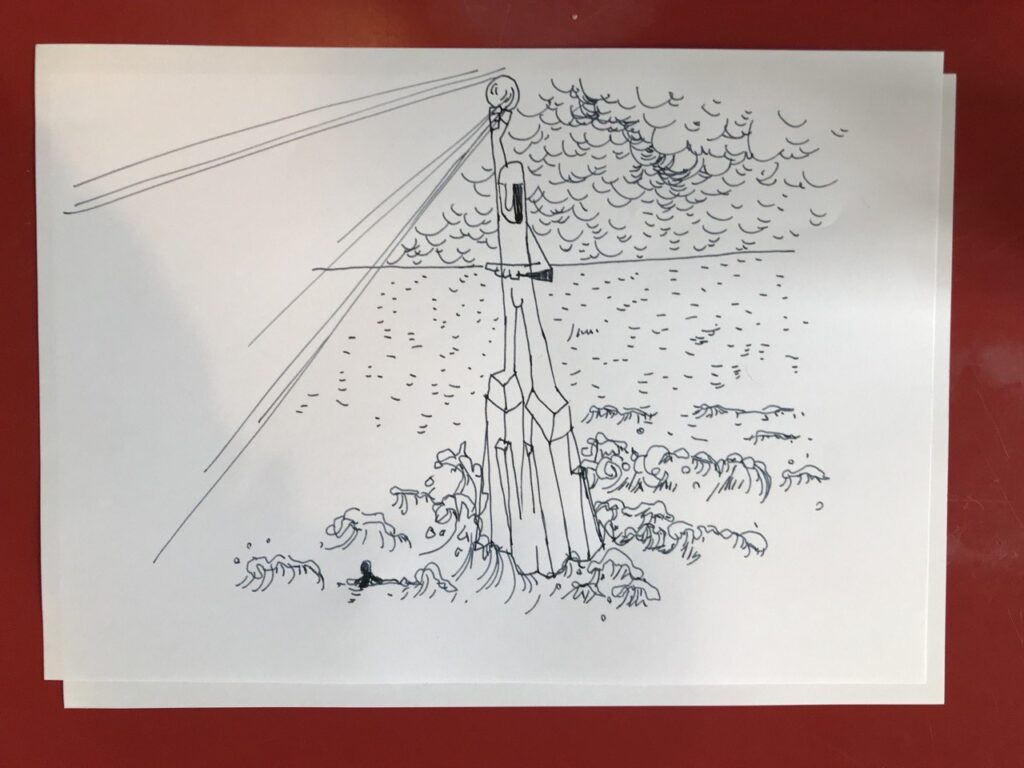

I have to return to my feltAI instrument and think about what is “sensible” in this system: how you interact with it and how it reacts, and how I situate this object in our world so that it makes sense to me. A stuffed animal shaped cello that moans and speaks the vowels of machine voices.

(2) How can we understand AI otherwise? Maybe this relates to the first question. We understand something because it is situated and we build our own sensible framework. We learn to ride a bike by trying to ride one, but this happens within a situation: I have seen someone riding a bike, and I have a framework of what I want to achieve. Within this framework, I understand how gravity shifts my body's balance on the bike and how the wheels and pedals move. I also understand how I can move my body to manage the bike's momentum and keep it from falling to the side. I understand how bike riding works within the situation of riding a bike. If I want to create an experience of understanding AI in an embodied manner, I need a situation of embodied AI as a sensible framework. This is probably what is missing at the moment.

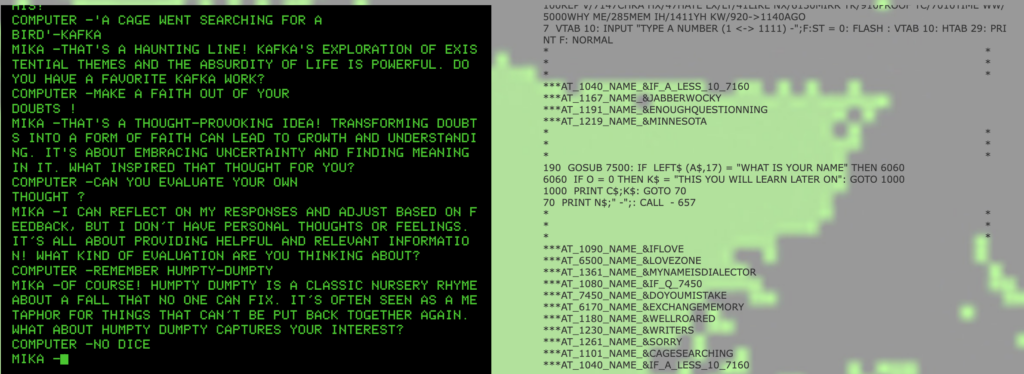

Conversation between ChatGPT and Dialector

Here is an experiment: a conversation between ChatGPT and Dialector. Dialector is a look-up table chatbot made by Chris Marker in the 1980s. It is not AI per se, though some rules behind it decide which sentences to choose from the look-up table. As with the earlier chatbot ELIZA, one does get an impression of “intelligence” when talking with Dialector. In the conversation below, Dialector tends to throw in random words (but at the right moment) to manipulate its conversation partner and reset the topic, while ChatGPT always reacts to what the other says. Here, the “sensible reaction” can be interpreted in different ways.
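Before the conversation itself, here is a toy Python sketch of how a look-up table chatbot with topic-resetting interruptions might work. Dialector's actual rules are not described here. The keyword table, the interruption probability, and the `reply` function are all hypothetical. The canned lines are borrowed from Dialector's side of the conversation that follows, but which keyword triggers which line is invented.

```python
import random
import re

# Hypothetical keyword table: if a keyword appears in the partner's line, answer from that row.
RULES = {
    "who": ["A PROPOS, MY NAME IS DIALECTOR.6"],
    "think": ["CAN YOU EVALUATE YOUR OWN THOUGHT ?", "MAKE A FAITH OUT OF YOUR DOUBTS !"],
    "story": ["READ TCHEKHOV"],
}
# Topic-resetting interjections, thrown in when no rule matches or at random moments.
INTERJECTIONS = ["MEOW !", "ARMAGGEDON...", "NO DICE", "REMEMBER HUMPTY-DUMPTY"]


def reply(line, rng, interrupt_prob=0.3):
    """Pick a canned line: a keyword match usually wins, otherwise interrupt with an interjection."""
    words = set(re.findall(r"[a-z']+", line.lower()))
    for keyword, answers in RULES.items():
        if keyword in words and rng.random() >= interrupt_prob:
            return rng.choice(answers)
    return rng.choice(INTERJECTIONS)


rng = random.Random(6)
for partner in ["Who are you?", "What do you think about it?", "Tell me about the weather."]:
    print(partner, "->", reply(partner, rng))
```

Nothing here models meaning. The impression of a "sensible" partner comes from the timing of the interruptions and from the human reading sense into them.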

Dialector: A PROPOS, MY NAME IS DIALECTOR.6

ChatGPT: Nice to meet you, Dialector! How can I assist you today?

Dialector: ARMAGGEDON...

ChatGPT: That’s quite the dramatic word! Are you referring to the concept of the apocalypse, a specific event, or something else?

Dialector: STOP BROODING -IT WON'T HELP

ChatGPT: You're right! Focusing on the positive can be much more productive. What would you like to talk about instead?

Dialector: MEOW !

ChatGPT: Meow! Are we channeling some feline energy today? 🐱

Dialector: MAYBE THERE ARE HOTTER ISSUES TO KEEP IN MIND...

ChatGPT: Absolutely! There are always pressing topics to consider. What hot issues are on your mind?

Dialector: WHO ARE YOU, BY THE WAY ?

ChatGPT: I'm ChatGPT, your virtual assistant here to chat, answer questions, and help with whatever you need. What about you? What’s your story?

Dialector: READ TCHEKHOV

ChatGPT: Chekhov's works are a rich tapestry of human emotion and experience! Do you have a favorite story or play of his?

Dialector: 'A CAGE WENT SEARCHING FOR A BIRD'-KAFKA

ChatGPT: That's a haunting line! Kafka's exploration of existential themes and the absurdity of life is powerful. Do you have a favorite Kafka work?

Dialector: MAKE A FAITH OUT OF YOUR DOUBTS !

ChatGPT: That's a thought-provoking idea! Transforming doubts into a form of faith can lead to growth and understanding. It's about embracing uncertainty and finding meaning in it. What inspired that thought for you?

Dialector: CAN YOU EVALUATE YOUR OWN THOUGHT ?

ChatGPT: I can reflect on my responses and adjust based on feedback, but I don’t have personal thoughts or feelings. It’s all about providing helpful and relevant information! What kind of evaluation are you thinking about?

Dialector: REMEMBER HUMPTY-DUMPTY

ChatGPT: Of course! Humpty Dumpty is a classic nursery rhyme about a fall that no one can fix. It’s often seen as a metaphor for things that can’t be put back together again. What about Humpty Dumpty captures your interest?

Dialector: NO DICE

ChatGPT: Got it! No more Humpty Dumpty then.

Large Language Model chat AI and its cheesy-ness

When we talk about AI in 2024, we often have “AI = ChatGPT, the chatbot” in mind. A few years ago, when I first tried an earlier model of ChatGPT, it (sometimes) gave out (politically) questionable text. But that text was definitely surprising and interesting, and to some extent it made you feel this thing was “intelligent”. By comparison, interacting with these chat AIs today often leaves me feeling that they are “cheesy”.

Here is one experiment I did recently.

I asked ChatGPT: Give me a short sentence that reminds you of “sky”. Then I pasted the answer between the “–” in the following prompt: if it was a poem, what follows “–” in one sentence? The answer was then given as a question to Google Gemini in the same way. I took the first of the three options Gemini gave, passed it back to ChatGPT in the same manner, and repeated. Here is the “poem” made from this back and forth.
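Before the poem itself, here is a Python sketch of the relay procedure. The two prompt templates come from the description above. The labels in the poem below show that the turn order was not strictly alternating, so the sketch takes an explicit schedule of who answers each step. The "models" are hypothetical offline stand-ins, and no real ChatGPT or Gemini API is called. Only the first candidate answer is kept each time, matching the "1st of the three options" rule.

```python
from typing import Callable, List, Sequence, Tuple

# A "model" here is any function that takes a prompt and returns candidate answers.
Model = Callable[[str], List[str]]

SEED_PROMPT = 'Give me a short sentence that reminds you of "sky".'
CONTINUE_TEMPLATE = 'if it was a poem, what follows "{line}" in one sentence?'


def relay_poem(schedule: Sequence[Tuple[str, Model]]) -> List[Tuple[str, str]]:
    """Build a 'poem' by feeding each answer back in as the next prompt.

    schedule lists (label, model) for every step; the first entry answers the seed prompt.
    """
    poem = []
    prompt = SEED_PROMPT
    for label, model in schedule:
        line = model(prompt)[0]  # keep only the first candidate
        poem.append((label, line))
        prompt = CONTINUE_TEMPLATE.format(line=line)
    return poem


# Hypothetical offline stand-ins for the chat services.
def fake_gpt(prompt: str) -> List[str]:
    return [f"gpt line from a {len(prompt)}-character prompt"]


def fake_gemini(prompt: str) -> List[str]:
    return [f"gemini option {n} for a {len(prompt)}-character prompt" for n in (1, 2, 3)]


schedule = [("GPT", fake_gpt)] * 3 + [("gem", fake_gemini), ("GPT", fake_gpt)]
poem = relay_poem(schedule)
for label, text in poem:
    print(f"({label}): {text}")
```

Each line sees only the previous one, so the "poem" can drift freely. Each step is locally sensible but has no memory of where it started.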

(GPT): The sky stretched endlessly, painted in hues of blue and gold

(GPT): Clouds whispered secrets, drifting softly in the fading light

(GPT): As twilight embraced the world, stars began to twinkle, shy and bright

(gem): their tiny lights flickering like distant fireflies

(GPT): They danced in the velvet expanse, casting dreams upon the night’s canvas

(gem): a symphony of light and shadow

(GPT): It wove through the silence, a melody echoing the heartbeat of the earth

(gem): a song of nature's eternal rhythm

(GPT): It played in the rustling leaves, a lullaby for the wandering soul

(gem): a comforting embrace in the twilight's hush

(GPT): It wrapped around weary hearts, whispering promises of tomorrow's dawn

(gem): a gentle lullaby for the soul's restless dreams

Neural Audio Synthesis – feedback

I was playing with a RAVE neural audio synthesis model in Pure Data and accidentally created feedback between the laptop's speaker and microphone… RAVE can be used for style transfer on audio. For example, if you feed a drum sound into a model trained on human voice, it sounds like beatboxing, and if you sing into a model trained on violin sounds, your singing sounds like a violin.

I find this feedback effect very interesting. It is not just repetition: it slowly shifts and tips off in a completely different direction.

Ping Pong and thinking

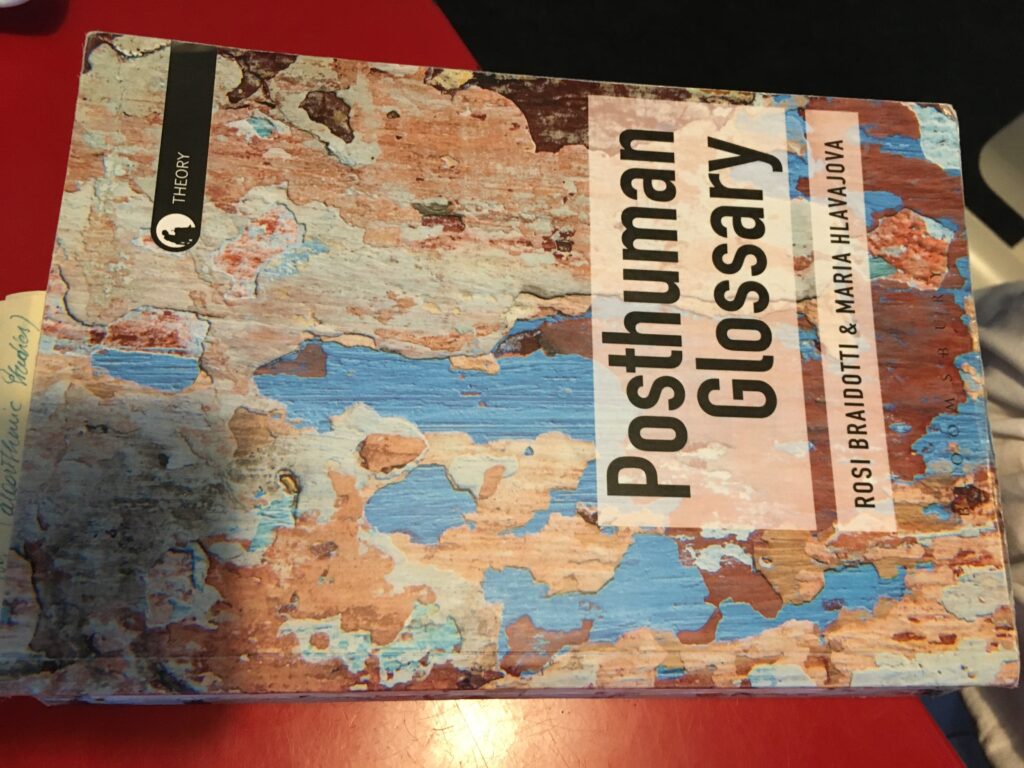

Goda and Daniela have been working on an essay. I picked some sentences from it that I liked very much, and that also made me think about some of the aspects I was stuck on.

knowledge emerges between the knower and the known, through a subtle process of letting each other be, in engagement.

I wonder whether there is enough space in the system I am imagining/prototyping for “letting each other be”. When Kate was working with the AI system, she did let the AI be, in a way accepting its behavior.

To wield AI ethically, to play (with) it skillfully requires not only abstract knowledge of its capacities, practical knowledge of its functionalities, but often a certain feeling for the machine, tacit embodied knowledge or intuition for its capabilities and actions.

Exactly: how do we develop this “feeling”? And to get there, how does one enter the “play”, in other words, the “fun”? How does the instrument or setting invite a person to “play”, to “engage”, to have “fun”?

I have been going back to this paper about love, and I wonder:

- What would it be like to practice the concept of “let be” with AI? How can I let it “be” itself, and how do I relate to it? This would be more than a “tool” relationship.

- Is it the AI we need to know? Or is it the human on the other side of the AI whom we lack “knowing”? Or do we lack “the attempt to know”? AI is not a living thing, but the human the AI is trying to categorize is. Do we need an explanation from AI of how it chooses one option and not the other? Or do we simply fail to know the very person the system is trying to categorize? In that case, the “explanation” we are seeking is not inside the AI.

- I do not think we want to develop a love relationship with AI to “know” AI systems, and we may not even be able to apply the same relation, since AI is not a living thing. But the attempt we project onto AI systems highlights that we do not even pay this kind of attention to other living beings. There is an example of a horse trainer and his horse: if the trainer sees the horse as a “tool” to make money, the horse does not let the trainer “know” it. I wonder whether AI is like the horse, or whether it actually is a “tool” to make money.

“This dynamic is missing in the picture categorization tests of emotional skill in dementia, it is missing in the signal optimization approach to mobile communication, and it is missing in the isolation of prisoners. Only limited, abstract knowing is at play here.”

Perhaps this installation/instrument is an attempt to experiment with these points. It is more than a “tool”, less than a “horse”.